Photographers have experimented with panoramic cameras since the Daguerreotype. Panoramas provide a fresh perspective on landscape that is both disconcerting (places and structures appear to assume different and unfamiliar relationships to each other in a panorama) and yet familiarly complete (the whole landscape is there as we might see it when we change our view by turning on the spot). Static panoramic photographs are also strangely beautiful, and often form striking artistic impressions of land and cityscapes.

Moving panoramas have become fashionable on the web (or perhaps have come and gone in fashion) as digital photography and software such as Apple's QuickTime VR supported their creation and display. Google Street View is the ultimate example of this, and assumes a strange beauty when deconstructed into its constituent images.

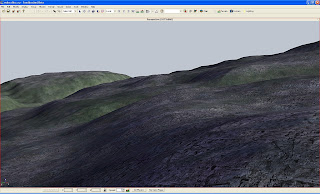

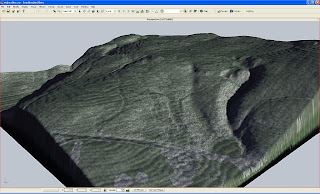

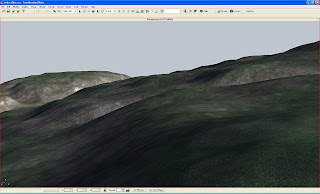

Games-based visualisations are at first glance locked to the fixed, first-person viewpoint of the avatar: a naturalistic view, but one limited in its artistic pretensions...

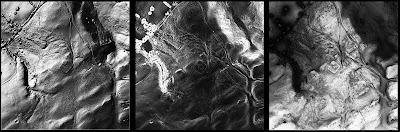

Following on from playing with the camera in CryEngine to simulate the effects of changing lenses on a 35mm camera, it struck me that combining a series of static "photographs" of the landscape using appropriate software (I used the free and rather wonderful Autostitch) would enable the creation of static panoramic images. So here are two, of Laxton Castle. These are 360-degree panoramas, each created from approximately 30 "photographs" of the landscape taken from a fixed location, each looking in a different direction. The results are quite pleasing and provide a different perspective on landscape, escaping from the avatar, as it were.
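For anyone wanting to plan a similar sweep, here is a rough sketch of the arithmetic behind "about 30 shots for 360 degrees". It assumes the shots are evenly spaced, which I did not measure, and the 40-degree horizontal field of view is purely an illustrative value, not the lens setting used for the images above. The function names are my own and have nothing to do with CryEngine or Autostitch.

```python
def panorama_headings(num_shots: int = 30) -> list[float]:
    """Evenly spaced camera headings (yaw, in degrees) for a full 360-degree sweep."""
    step = 360.0 / num_shots
    return [i * step for i in range(num_shots)]


def overlap_fraction(num_shots: int, horizontal_fov_deg: float) -> float:
    """Approximate angular overlap between neighbouring shots, as a fraction of one frame.

    This treats overlap as a simple ratio of angles, which is only an
    approximation for a rectilinear (ordinary lens) projection.
    """
    step = 360.0 / num_shots
    return max(0.0, 1.0 - step / horizontal_fov_deg)


if __name__ == "__main__":
    headings = panorama_headings(30)
    print(headings[:4])                  # first few headings in degrees
    print(overlap_fraction(30, 40.0))    # hypothetical 40-degree field of view
```

With 30 shots the camera turns 12 degrees between frames, so under these assumptions neighbouring frames share roughly 70% of their view, which gives stitching software plenty of common features to match.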